Caching enables you to store data in memory for rapid access. When the data is accessed again, applications can get the data from the cache instead of retrieving it from the original source. This can improve performance and scalability. In addition, caching makes data available when the data source is temporarily unavailable.

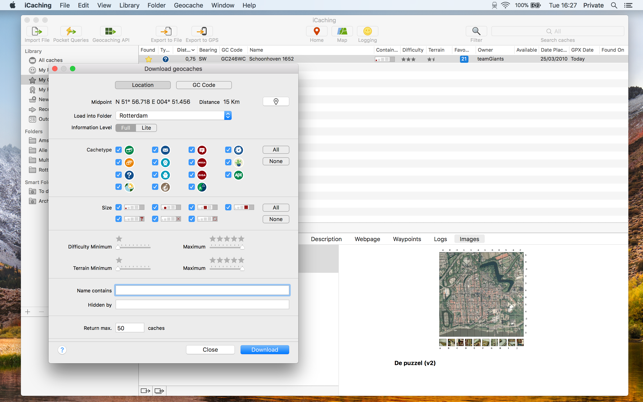

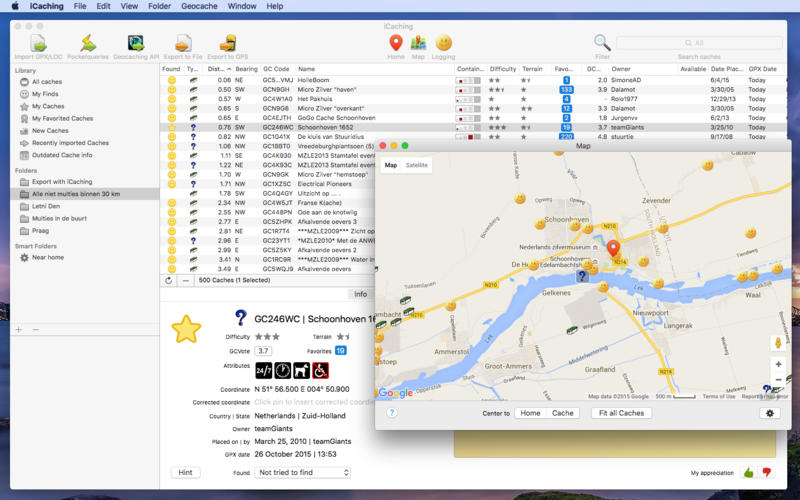

ICaching is the all-in-one Geocache manager for the Mac. It is a fast, native app with a real Mac userinterface like you’re used to from applications like iTunes. Available in the Mac App Store. ICaching is the all-in-one Geocache manager for the Mac.

In gpx files from GSAK and iCaching there is a information about the original coordinates included. So Looking4Cache recognizes that the coordinates are corrected. You can refresh the whole list (using the ‘All Data’ option) to see which geocaches have corrected coordinates. Geocaching is a treasure hunting game where you use a GPS to hide and seek containers with other participants in the activity. Geocaching.com is the listing service for geocaches around the world.

The .NET Framework provides caching functionality that you can use to improve the performance and scalability of both Windows client and server applications, including ASP.NET.

Note

In the .NET Framework 3.5 and earlier versions, ASP.NET provided an in-memory cache implementation in the System.Web.Caching namespace. In previous versions of the .NET Framework, caching was available only in the System.Web namespace and therefore required a dependency on ASP.NET classes. In the .NET Framework 4, the System.Runtime.Caching namespace contains APIs that are designed for both Web and non-Web applications.

Caching Data

You can cache information by using classes in the System.Runtime.Caching namespace. The caching classes in this namespace provide the following features:

- Abstract types that provide the foundation for creating custom cache implementations.

- A concrete in-memory object cache implementation.

The abstract base caching class (ObjectCache) defines the following caching tasks:

- Creating and managing cache entries.

- Specifying expiration and eviction information.

- Triggering events that are raised in response to changes in cache entries.

The MemoryCache class is an in-memory object cache implementation of the ObjectCache class. You can use the MemoryCache class for most caching tasks.
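As a rough illustration of the MemoryCache class, the following sketch stores and reads one entry through the default cache instance. The key name, the cached string, and the ten-minute expiration are arbitrary choices made for this example. The snippet is written as a console program with top-level statements, which assumes a newer C# compiler than the one that shipped with the .NET Framework 4, and a reference to the System.Runtime.Caching assembly or package.

```csharp
using System;
using System.Runtime.Caching;

// MemoryCache.Default is the default in-memory cache instance for the process.
ObjectCache cache = MemoryCache.Default;

// Hypothetical key, used only for illustration.
const string key = "greeting";

var policy = new CacheItemPolicy
{
    // Evict the entry ten minutes after it is added.
    AbsoluteExpiration = DateTimeOffset.Now.AddMinutes(10)
};
cache.Set(key, "Hello from the cache", policy);

// Get returns null when the key is missing or the entry has expired,
// so the code falls back to another value in that case.
string cached = cache.Get(key) as string ?? "Hello from the source";
Console.WriteLine(cached);
```

The fallback on the last line reflects the general rule that code should keep working when an entry is no longer in the cache.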

Note

The MemoryCache class is modeled on the ASP.NET cache object that is defined in the System.Web.Caching namespace. Therefore, the internal caching logic is similar to the logic that was provided in earlier versions of ASP.NET.

For an example of how to use caching in a WPF application, see Walkthrough: Caching Application Data in a WPF Application.

Caching in ASP.NET Applications

The caching classes in the System.Runtime.Caching namespace provide functionality for caching data in ASP.NET.

Note

If your application targets the .NET Framework 3.5 or earlier, you must use the caching classes that are defined in the System.Web.Caching namespace. For more information, see ASP.NET Caching Overview.

Note

When you develop new applications, we recommend that you use the MemoryCache class. The API that is provided in the System.Runtime.Caching namespace is like the API that is provided by the Cache class in the System.Web.Caching namespace. Therefore, the API will be familiar if you used caching in earlier versions of ASP.NET. For an example of how to use caching in ASP.NET applications, see Walkthrough: Caching Application Data in ASP.NET.

Output Caching

To manually cache application data, you can use the MemoryCache class in ASP.NET. ASP.NET also supports output caching, which stores the generated output of pages, controls, and HTTP responses in memory. You can configure output caching declaratively in an ASP.NET Web page or by using settings in the Web.config file. For more information, see outputCache Element for caching (ASP.NET Settings Schema).

ASP.NET lets you extend output caching by creating custom output-cache providers. By using custom providers, you can store cached content using other storage devices such as disks, cloud storage, and distributed cache engines. To create a custom output cache provider, you create a class that derives from the OutputCacheProvider class and configure the application to use the custom output cache provider.
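A custom provider might be sketched as follows. The class name InMemoryOutputCacheProvider is hypothetical, and a dictionary stands in for the disk, cloud, or distributed store that a real provider would use. The four overridden members are the abstract methods of OutputCacheProvider. Registering the provider in Web.config is not shown.

```csharp
using System;
using System.Collections.Concurrent;
using System.Web.Caching;

// Hypothetical provider that keeps output-cache entries in a dictionary.
public class InMemoryOutputCacheProvider : OutputCacheProvider
{
    private readonly ConcurrentDictionary<string, Tuple<object, DateTime>> _items =
        new ConcurrentDictionary<string, Tuple<object, DateTime>>();

    // Returns the existing entry if one is cached; otherwise stores the new one.
    // The check-then-store sequence is not atomic, which is acceptable for a sketch.
    public override object Add(string key, object entry, DateTime utcExpiry)
    {
        object existing = Get(key);
        if (existing != null)
        {
            return existing;
        }
        Set(key, entry, utcExpiry);
        return entry;
    }

    // Returns null for missing or expired entries.
    public override object Get(string key)
    {
        Tuple<object, DateTime> item;
        if (!_items.TryGetValue(key, out item))
        {
            return null;
        }
        if (item.Item2 <= DateTime.UtcNow)
        {
            Remove(key);
            return null;
        }
        return item.Item1;
    }

    public override void Remove(string key)
    {
        Tuple<object, DateTime> removed;
        _items.TryRemove(key, out removed);
    }

    // Inserts the entry, overwriting any existing entry with the same key.
    public override void Set(string key, object entry, DateTime utcExpiry)
    {
        _items[key] = Tuple.Create(entry, utcExpiry);
    }
}
```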

Caching in WCF REST Services

For WCF REST services, the .NET Framework enables you to take advantage of the declarative output caching that is available in ASP.NET. This enables you to cache responses from your WCF REST service operations. When a user sends an HTTP GET request to a service that is configured for caching, ASP.NET sends back the cached response, and the service method is not called. After the cache expires, the next time that a user sends an HTTP GET request, your service method is called and the response is again cached.

The .NET Framework also enables you to implement conditional HTTP GET caching. In REST scenarios, a conditional HTTP GET request is often used by services to implement intelligent HTTP caching as described in the HTTP Specification. For more information, see Caching Support for WCF Web HTTP Services.

Extending Caching in the .NET Framework

Caching in the .NET Framework is designed to be extensible. The ObjectCache class enables you to create a custom cache implementation. You might do this in order to create a cache class that uses a different storage mechanism, or if you want granular control over cache operations. The ObjectCache class provides members that are available to all managed applications, including Windows Forms, Windows Presentation Foundation (WPF), and Windows Communication Foundation (WCF).

To extend caching, you can do the following:

- Create a custom class that derives from the ObjectCache class and then provide a custom cache implementation in the derived class.

- Create a class that derives from the MemoryCache class and customize or extend the derived class. For an example of how to do this, see Caching Application Data by Using Multiple Cache Objects in an ASP.NET Application.

- Create a class that derives from the OutputCacheProvider class and configure the application to use the custom output cache provider.

For more information, see the entry Extensible Output Caching with ASP.NET 4 (VS 2010 and .NET Framework 4.0 Series) on Scott Guthrie's blog.


By Rick Anderson, John Luo, and Steve Smith

View or download sample code (how to download)

Caching basics

Caching can significantly improve the performance and scalability of an app by reducing the work required to generate content. Caching works best with data that changes infrequently and is expensive to generate. Caching makes a copy of data that can be returned much faster than from the source. Apps should be written and tested to never depend on cached data.

ASP.NET Core supports several different caches. The simplest cache is based on the IMemoryCache. IMemoryCache represents a cache stored in the memory of the web server. Apps running on a server farm (multiple servers) should ensure sessions are sticky when using the in-memory cache. Sticky sessions ensure that subsequent requests from a client all go to the same server. For example, Azure Web apps use Application Request Routing (ARR) to route all subsequent requests to the same server.

Non-sticky sessions in a web farm require a distributed cache to avoid cache consistency problems. For some apps, a distributed cache can support higher scale-out than an in-memory cache. Using a distributed cache offloads the cache memory to an external process.

The in-memory cache can store any object. The distributed cache interface is limited to byte[]. Both the in-memory and distributed caches store cache items as key-value pairs.

System.Runtime.Caching/MemoryCache

System.Runtime.Caching/MemoryCache (NuGet package) can be used with:

- .NET Standard 2.0 or later.

- Any .NET implementation that targets .NET Standard 2.0 or later. For example, ASP.NET Core 2.0 or later.

- .NET Framework 4.5 or later.

Microsoft.Extensions.Caching.Memory/IMemoryCache (described in this article) is recommended over System.Runtime.Caching/MemoryCache because it's better integrated into ASP.NET Core. For example, IMemoryCache works natively with ASP.NET Core dependency injection.

Use System.Runtime.Caching/MemoryCache as a compatibility bridge when porting code from ASP.NET 4.x to ASP.NET Core.

Cache guidelines

- Code should always have a fallback option to fetch data and not depend on a cached value being available.

- The cache uses a scarce resource, memory. Limit cache growth:

  - Do not use external input as cache keys.

  - Use expirations to limit cache growth.

  - Use SetSize, Size, and SizeLimit to limit cache size. The ASP.NET Core runtime does not limit cache size based on memory pressure. It's up to the developer to limit cache size.

Use IMemoryCache

Warning

Using a shared memory cache from Dependency Injection and calling SetSize, Size, or SizeLimit to limit cache size can cause the app to fail. When a size limit is set on a cache, all entries must specify a size when being added. This can lead to issues since developers may not have full control over what uses the shared cache. A shared cache is one shared by other frameworks or libraries. For example, Entity Framework Core uses the shared cache and does not specify a size. If an app sets a cache size limit and uses EF Core, the app throws an InvalidOperationException.

When using SetSize, Size, or SizeLimit to limit cache size, create a cache singleton for caching. For more information and an example, see Use SetSize, Size, and SizeLimit to limit cache size.

In-memory caching is a service that's referenced from an app using Dependency Injection. Request the IMemoryCache instance in the constructor:
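The original sample is not reproduced here. A minimal sketch of constructor injection, assuming a hypothetical TimeService consumer and a standalone ServiceCollection in place of an ASP.NET Core app's service registration, might look like this. In a web app the consumer would usually be a controller or page model.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.DependencyInjection;

// Build a container the way an app's service registration would.
var services = new ServiceCollection();
services.AddMemoryCache();               // registers the shared IMemoryCache
services.AddTransient<TimeService>();

using var provider = services.BuildServiceProvider();
var timeService = provider.GetRequiredService<TimeService>();
Console.WriteLine(timeService.HasCache);

// Hypothetical consumer of the cache.
public class TimeService
{
    private readonly IMemoryCache _cache;

    // The container supplies the IMemoryCache instance.
    public TimeService(IMemoryCache cache) => _cache = cache;

    public bool HasCache => _cache != null;
}
```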

The following code uses TryGetValue to check if a time is in the cache. If a time isn't cached, a new entry is created and added to the cache with Set. The CacheKeys class is part of the download sample.
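A sketch of that pattern, written as a console program rather than a controller action. The key "_Entry" is a stand-in for the value held by the CacheKeys class, and the three-second sliding expiration is an arbitrary choice.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// Hypothetical key; the original sample keeps its keys in a CacheKeys class.
const string EntryKey = "_Entry";

DateTime GetCachedTime()
{
    // Look for the key in the cache first.
    if (!cache.TryGetValue(EntryKey, out DateTime cacheEntry))
    {
        // The key is not cached, so produce the value.
        cacheEntry = DateTime.Now;

        // Keep the entry while it is read at least once every three seconds.
        var options = new MemoryCacheEntryOptions()
            .SetSlidingExpiration(TimeSpan.FromSeconds(3));

        cache.Set(EntryKey, cacheEntry, options);
    }
    return cacheEntry;
}

DateTime first = GetCachedTime();
DateTime second = GetCachedTime();

// The second call is served from the cache, so both values match.
Console.WriteLine(first == second);
```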

The current time and the cached time are displayed:

The following code uses the Set extension method to cache data for a relative time without creating the MemoryCacheEntryOptions object.
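A short sketch of that overload, with a hypothetical key and a one-day lifetime chosen for illustration. The TimeSpan argument is an absolute expiration measured from the moment the entry is added.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// The TimeSpan overload of Set needs no MemoryCacheEntryOptions object.
// Set returns the value that was stored.
DateTime stored = cache.Set("_Entry", DateTime.Now, TimeSpan.FromDays(1));

Console.WriteLine(cache.Get<DateTime>("_Entry") == stored);
```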

The cached DateTime value remains in the cache while there are requests within the timeout period.

The following code uses GetOrCreate and GetOrCreateAsync to cache data.
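A sketch of both methods under the same assumptions as before: the keys and the three-second sliding expiration are illustrative, and the asynchronous factory completes immediately where real code would await an expensive operation.

```csharp
using System;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

// Synchronous: the factory runs only when the key is missing.
DateTime syncEntry = cache.GetOrCreate("_SyncEntry", entry =>
{
    entry.SlidingExpiration = TimeSpan.FromSeconds(3);
    return DateTime.Now;
});

// Asynchronous: the same idea, but the factory returns a Task.
DateTime asyncEntry = await cache.GetOrCreateAsync("_AsyncEntry", entry =>
{
    entry.SlidingExpiration = TimeSpan.FromSeconds(3);
    return Task.FromResult(DateTime.Now);
});

Console.WriteLine($"{syncEntry:O} {asyncEntry:O}");
```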


The following code calls Get to fetch the cached time:
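A minimal sketch of Get with hypothetical keys. It also shows that asking for a key that is not cached yields the type's default value rather than an exception, which is one reason calling code needs a fallback.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());
DateTime stored = cache.Set("_Entry", DateTime.Now);

// Fetch the cached time.
DateTime cached = cache.Get<DateTime>("_Entry");

// A key that was never cached produces default(DateTime).
DateTime missing = cache.Get<DateTime>("_Missing");

Console.WriteLine(cached == stored);
Console.WriteLine(missing == default(DateTime));
```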

The following code gets or creates a cached item with absolute expiration:
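For instance, assuming a hypothetical key and a ten-second lifetime:

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

DateTime cached = cache.GetOrCreate("_AbsoluteEntry", entry =>
{
    // The entry expires ten seconds after creation, however often it is read.
    entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromSeconds(10);
    return DateTime.Now;
});

Console.WriteLine(cached.ToString("O"));
```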

A cached item set with only a sliding expiration is at risk of becoming stale. If it's accessed more frequently than the sliding expiration interval, the item never expires. Combine a sliding expiration with an absolute expiration to guarantee that the item expires once its absolute expiration time passes. The absolute expiration sets an upper bound on how long the item can be cached while still allowing the item to expire earlier if it isn't requested within the sliding expiration interval. When both absolute and sliding expiration are specified, the expirations are logically ORed. If either the sliding expiration interval or the absolute expiration time passes, the item is evicted from the cache.

The following code gets or creates a cached item with both sliding and absolute expiration:
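A sketch of the combination, with a hypothetical key and intervals of 3 and 20 seconds picked only for illustration:

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());

DateTime cached = cache.GetOrCreate("_CombinedEntry", entry =>
{
    // Expire early if nobody reads the entry for three seconds...
    entry.SlidingExpiration = TimeSpan.FromSeconds(3);

    // ...and expire after twenty seconds at the latest, even if it is read constantly.
    entry.AbsoluteExpirationRelativeToNow = TimeSpan.FromSeconds(20);

    return DateTime.Now;
});

Console.WriteLine(cached.ToString("O"));
```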

The preceding code guarantees the data will not be cached longer than the absolute time.

GetOrCreate, GetOrCreateAsync, and Get are extension methods in the CacheExtensions class. These methods extend the capability of IMemoryCache.

MemoryCacheEntryOptions

The following sample:

- Sets a sliding expiration time. Requests that access this cached item will reset the sliding expiration clock.

- Sets the cache priority to CacheItemPriority.NeverRemove.

- Sets a PostEvictionDelegate that will be called after the entry is evicted from the cache. The callback is run on a different thread from the code that removes the item from the cache.
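The three settings listed above could be sketched as follows. The key, the message text, and the use of a ManualResetEventSlim to wait for the callback are assumptions made so that the example runs as a console program. The entry is removed manually to trigger the callback.

```csharp
using System;
using System.Threading;
using Microsoft.Extensions.Caching.Memory;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());
using var evicted = new ManualResetEventSlim();
string evictionMessage = "";

var options = new MemoryCacheEntryOptions()
    // Each read resets the three-second sliding clock.
    .SetSlidingExpiration(TimeSpan.FromSeconds(3))
    // Pin the entry so that compaction does not remove it.
    .SetPriority(CacheItemPriority.NeverRemove)
    // Called after the entry leaves the cache, on a different thread.
    .RegisterPostEvictionCallback((key, value, reason, state) =>
    {
        evictionMessage = $"Entry '{key}' was evicted. Reason: {reason}.";
        evicted.Set();
    });

cache.Set("_CallbackEntry", DateTime.Now, options);

// Manual removal also fires the post-eviction callback.
cache.Remove("_CallbackEntry");

// Wait for the callback, since it does not run on this thread.
evicted.Wait(TimeSpan.FromSeconds(5));
Console.WriteLine(evictionMessage);
```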

Use SetSize, Size, and SizeLimit to limit cache size

A MemoryCache instance may optionally specify and enforce a size limit. The cache size limit does not have a defined unit of measure because the cache has no mechanism to measure the size of entries. If the cache size limit is set, all entries must specify size. The ASP.NET Core runtime does not limit cache size based on memory pressure. It's up to the developer to limit cache size. The size specified is in units the developer chooses.

For example:

- If the web app was primarily caching strings, each cache entry size could be the string length.

- The app could specify the size of all entries as 1, and the size limit is the count of entries.

If SizeLimit isn't set, the cache grows without bound. The ASP.NET Core runtime doesn't trim the cache when system memory is low. Apps must be architected to:

- Limit cache growth.

- Call Compact or Remove when available memory is limited.

The following code creates a unitless fixed size MemoryCache accessible by dependency injection:
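A sketch of such a wrapper class follows. The limit of 1024 is an arbitrary number for this example, and the top-level statements exist only to make the snippet runnable on its own.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

var myCache = new MyMemoryCache();
Console.WriteLine(myCache.Cache.Count);

// Wrapper type, so the size-limited cache can be registered separately
// from the shared IMemoryCache.
public class MyMemoryCache
{
    public MemoryCache Cache { get; } = new MemoryCache(
        new MemoryCacheOptions
        {
            // Unitless: what 1024 means depends on how entries report their size.
            SizeLimit = 1024
        });
}
```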

SizeLimit does not have units. Cached entries must specify size in whatever units they deem most appropriate if the cache size limit has been set. All users of a cache instance should use the same unit system. An entry will not be cached if the sum of the cached entry sizes exceeds the value specified by SizeLimit. If no cache size limit is set, the cache size set on the entry will be ignored.

The following code registers MyMemoryCache with the dependency injection container.
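A sketch of the registration, using a standalone ServiceCollection where an ASP.NET Core app would use the collection passed to ConfigureServices. The class is repeated so that the snippet compiles on its own.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.DependencyInjection;

var services = new ServiceCollection();

// One instance for the whole app, independent of the shared IMemoryCache.
services.AddSingleton<MyMemoryCache>();

using var provider = services.BuildServiceProvider();
var first = provider.GetRequiredService<MyMemoryCache>();
var second = provider.GetRequiredService<MyMemoryCache>();

// Every consumer receives the same size-limited cache.
Console.WriteLine(ReferenceEquals(first, second));

public class MyMemoryCache
{
    public MemoryCache Cache { get; } = new MemoryCache(
        new MemoryCacheOptions { SizeLimit = 1024 });
}
```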

MyMemoryCache is created as an independent memory cache for components that are aware of this size limited cache and know how to set cache entry size appropriately.

The following code uses MyMemoryCache:

The size of the cache entry can be set by the Size property or the SetSize extension method:
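Both ways of setting the size are sketched below, using a plain size-limited MemoryCache in place of MyMemoryCache to keep the snippet short. The limit of 2 and the size of 1 per entry are illustrative. They make the limit behave as a maximum entry count.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// With every entry sized 1, SizeLimit = 2 means "at most two entries".
using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions { SizeLimit = 2 });

// Option 1: the SetSize extension method.
cache.Set("a", "first", new MemoryCacheEntryOptions().SetSize(1));

// Option 2: the Size property.
cache.Set("b", "second", new MemoryCacheEntryOptions { Size = 1 });

// The limit is already reached, so this entry is not cached.
cache.Set("c", "third", new MemoryCacheEntryOptions().SetSize(1));
bool thirdCached = cache.TryGetValue("c", out object _);
Console.WriteLine(thirdCached);

// An entry without a size is rejected when the cache has a size limit.
bool rejected = false;
try
{
    cache.Set("d", "no size");
}
catch (InvalidOperationException)
{
    rejected = true;
}
Console.WriteLine(rejected);
```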

MemoryCache.Compact

MemoryCache.Compact attempts to remove the specified percentage of the cache in the following order:

- All expired items.

- Items by priority. Lowest priority items are removed first.

- Least recently used objects.

- Items with the earliest absolute expiration.

- Items with the earliest sliding expiration.

Pinned items with priority NeverRemove are never removed. The following code removes a cache item and calls Compact:
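A sketch of Remove followed by Compact. The keys and priorities are hypothetical, and a percentage of 1.0 is used so that the effect is visible with only a few entries.

```csharp
using System;
using Microsoft.Extensions.Caching.Memory;

// Compact is defined on the concrete MemoryCache type, not on IMemoryCache.
using var cache = new MemoryCache(new MemoryCacheOptions());

cache.Set("pinned", 1, new MemoryCacheEntryOptions().SetPriority(CacheItemPriority.NeverRemove));
cache.Set("low", 2, new MemoryCacheEntryOptions().SetPriority(CacheItemPriority.Low));
cache.Set("normal", 3);

// Remove one specific entry by key.
cache.Remove("normal");

// Ask the cache to remove 100% of what it is allowed to remove.
cache.Compact(1.0);

// Only the pinned entry is left.
int remaining = cache.Count;
Console.WriteLine(remaining);
```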

See Compact source on GitHub for more information.

Cache dependencies

The following sample shows how to expire a cache entry if a dependent entry expires. A CancellationChangeToken is added to the cached item. When Cancel is called on the CancellationTokenSource, both cache entries are evicted.
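A sketch under stated assumptions: the keys are hypothetical, and the token is attached to the parent entry explicitly rather than relying on what entries inherit inside the using block, because that inheritance may depend on the package version and its options.

```csharp
using System;
using System.Threading;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Primitives;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());
using var cts = new CancellationTokenSource();

using (ICacheEntry parent = cache.CreateEntry("_Parent"))
{
    parent.Value = DateTime.Now;

    // Tie the parent to the token explicitly.
    parent.AddExpirationToken(new CancellationChangeToken(cts.Token));

    // The child is tied to the same token.
    cache.Set("_Child", DateTime.Now, new CancellationChangeToken(cts.Token));
}   // Disposing the parent entry commits it to the cache.

bool cachedBefore = cache.TryGetValue("_Parent", out object _)
                    && cache.TryGetValue("_Child", out object _);

// Cancelling the source expires every entry that holds its token.
cts.Cancel();

bool cachedAfter = cache.TryGetValue("_Parent", out object _)
                   || cache.TryGetValue("_Child", out object _);

Console.WriteLine($"{cachedBefore} {cachedAfter}");
```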

Using a CancellationTokenSource allows multiple cache entries to be evicted as a group. With the using pattern in the code above, cache entries created inside the using block will inherit triggers and expiration settings.

Additional notes

Expiration doesn't happen in the background. There is no timer that actively scans the cache for expired items. Any activity on the cache (Get, Set, Remove) can trigger a background scan for expired items. A timer on the CancellationTokenSource (CancelAfter) also removes the entry and triggers a scan for expired items. The following example uses CancellationTokenSource(TimeSpan) for the registered token. When this token fires it removes the entry immediately and fires the eviction callbacks:
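A sketch of a timed token, assuming a hypothetical key and a 200-millisecond timer. A ManualResetEventSlim is used here only so the console program can wait for the eviction callback.

```csharp
using System;
using System.Threading;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Primitives;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());
using var evicted = new ManualResetEventSlim();

// The source cancels itself after 200 milliseconds.
using var cts = new CancellationTokenSource(TimeSpan.FromMilliseconds(200));

var options = new MemoryCacheEntryOptions()
    .AddExpirationToken(new CancellationChangeToken(cts.Token))
    .RegisterPostEvictionCallback((key, value, reason, state) => evicted.Set());

cache.Set("_TimedEntry", DateTime.Now, options);

// No further cache activity is needed: the token firing removes the entry.
bool callbackFired = evicted.Wait(TimeSpan.FromSeconds(5));
Console.WriteLine(callbackFired);
```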

When using a callback to repopulate a cache item:

- Multiple requests can find the cached key value empty because the callback hasn't completed.

- This can result in several threads repopulating the cached item.

When one cache entry is used to create another, the child copies the parent entry's expiration tokens and time-based expiration settings. The child isn't expired by manual removal or updating of the parent entry.

Use PostEvictionCallbacks to set the callbacks that will be fired after the cache entry is evicted from the cache.

For most apps, IMemoryCache is enabled. For example, calling AddMvc, AddControllersWithViews, AddRazorPages, AddMvcCore().AddRazorViewEngine, and many other Add{Service} methods in ConfigureServices enables IMemoryCache. For apps that are not calling one of the preceding Add{Service} methods, it may be necessary to call AddMemoryCache in ConfigureServices.

Background cache update

Use a background service such as IHostedService to update the cache. The background service can recompute the entries and then assign them to the cache only when they’re ready.
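One way to sketch this is with BackgroundService, the base class that implements IHostedService. The CacheRefreshService name, the "_Report" key, the refresh interval, and the BuildReportAsync helper are all hypothetical. The service is started by hand here so that the snippet runs as a console program. In an app it would be registered with AddHostedService.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;
using Microsoft.Extensions.Caching.Memory;
using Microsoft.Extensions.Hosting;

using IMemoryCache cache = new MemoryCache(new MemoryCacheOptions());
using var refresher = new CacheRefreshService(cache, TimeSpan.FromMilliseconds(100));

await refresher.StartAsync(CancellationToken.None);
await Task.Delay(300);
await refresher.StopAsync(CancellationToken.None);

bool populated = cache.TryGetValue("_Report", out object _);
Console.WriteLine(populated);

// Hypothetical service that refreshes one cache entry on a fixed interval.
public sealed class CacheRefreshService : BackgroundService
{
    private readonly IMemoryCache _cache;
    private readonly TimeSpan _interval;

    public CacheRefreshService(IMemoryCache cache, TimeSpan interval)
    {
        _cache = cache;
        _interval = interval;
    }

    protected override async Task ExecuteAsync(CancellationToken stoppingToken)
    {
        while (!stoppingToken.IsCancellationRequested)
        {
            // Recompute first. Readers keep seeing the old value meanwhile.
            string report = await BuildReportAsync();

            // Assign to the cache only once the new value is ready.
            _cache.Set("_Report", report);

            await Task.Delay(_interval, stoppingToken);
        }
    }

    // Stand-in for an expensive computation.
    private static Task<string> BuildReportAsync()
        => Task.FromResult($"Generated at {DateTime.UtcNow:O}");
}
```

Because the entry is replaced rather than removed and rebuilt, requests do not hit an empty cache while the new value is being computed.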
